High steaks: why young men are eating more meat, and what it means for health, masculinity, and the planet

The Protein Paradox: When Meat Meets Masculinity

While the UK has steadily reduced its meat consumption over the past two decades, a surprising demographic is bucking the trend: young men aged 16 to 24. According to Hubbub’s recent High Steaks report, this group is nearly three times more likely than the general population to have increased their meat intake in the past year. Over 40% eat meat daily, and a similar proportion express reluctance to cut back.

This divergence raises pressing questions: Why are young men consuming more meat amid growing awareness of its environmental and health impacts? And how can we address this trend without alienating those we aim to engage?

Meat and Masculinity: A Cultural Entanglement

The association between meat and masculinity is deeply ingrained. From barbecues to bodybuilding, meat has long symbolized strength, virility, and dominance. The High Steaks report highlights that men are 50% less likely than women to identify as vegetarian or plant-based. Younger men, in particular, often feel uncomfortable eating plant-based meals in social settings, fearing judgment or emasculation.

This discomfort isn't merely anecdotal. Social norms and peer perceptions play significant roles in dietary choices. The stereotype of the "soy boy," a derogatory term used to belittle men who consume plant-based diets, exemplifies the stigma attached to plant-based eating among certain male groups.

The Manosphere's Influence: Online Echo Chambers

A significant factor amplifying meat consumption among young men is the "manosphere"—a network of online communities promoting traditional masculine ideals. Influential figures like Joe Rogan, Jordan Peterson, and Andrew Tate advocate for carnivorous diets, often touting unverified health benefits and downplaying environmental concerns. Their messages, disseminated through podcasts and social media, reach millions, reinforcing the notion that real men eat meat.

These narratives are not just about diet; they intertwine with broader themes of identity, resistance to perceived societal changes, and a reclamation of traditional male roles. The manosphere often positions plant-based diets as antithetical to masculinity, further entrenching dietary habits among its followers.

Protein Pressures and Misconceptions

The fitness industry's emphasis on protein intake has also contributed to increased meat consumption. Young men, striving for muscular physiques, often believe that high meat intake is essential for achieving their goals. However, nutritionists like Federica Amati note that many set unnecessarily high protein targets, leading to overconsumption of meat and potential health risks.

This protein obsession is fueled by marketing strategies that label various products—from milkshakes to snack bars—as "high-protein," reinforcing the idea that more protein equates to better health and fitness outcomes.

Health and Environmental Implications

The surge in meat consumption among young men has both health and environmental repercussions. Diets high in red and processed meats are linked to increased risks of colorectal cancer, heart disease, and other health issues. Environmentally, livestock farming is a significant contributor to greenhouse gas emissions, deforestation, and water pollution.

Despite these concerns, misinformation proliferates. Some influencers claim that meat consumption has negligible environmental impact, contradicting scientific consensus. This misinformation hampers efforts to promote sustainable dietary choices.

Reframing the Narrative: Strategies for Change

Addressing the rise in meat consumption among young men requires nuanced strategies that resonate with their values and identities:

- Align Plant-Based Eating with Masculine Ideals: Position plant-based diets as conducive to strength, endurance, and peak performance. Highlight athletes and fitness enthusiasts who thrive on plant-based nutrition.

- Leverage Trusted Influencers: Collaborate with male influencers who embody the desired traits and advocate for plant-forward diets. Their endorsement can challenge existing stereotypes and normalize alternative dietary choices.

- Promote Familiar and Appealing Plant-Based Options: Introduce plant-based meals that resemble traditional favorites, such as burgers or curries, making the transition less daunting.

- Focus on Personal Benefits: Emphasize immediate, tangible benefits like improved recovery times, enhanced energy levels, and cost savings, rather than solely environmental or ethical arguments.

- Create Supportive Environments: Encourage gyms, sports clubs, and educational institutions to offer and promote plant-based options, making them accessible and socially acceptable choices.

Conclusion: Towards Inclusive and Sustainable Eating Habits

The intersection of diet, identity, and misinformation presents a complex challenge. However, by understanding the underlying motivations and societal pressures influencing young men's dietary choices, we can craft messages and interventions that resonate. Promoting plant-based eating as a path to strength, health, and authenticity, not as a departure from masculinity, can pave the way for more inclusive and sustainable food systems.

Applejuicification: why consumers deserve clearer juice labels

Walk down any supermarket juice aisle and you're greeted with an abundance of options: berry bursts, exotic blends, antioxidant elixirs. But beneath the vibrant names and premium prices, a single, often unacknowledged ingredient dominates: apple juice.

This quiet dominance—now referred to as applejuicification—reflects a wider systemic issue in the food industry, where one affordable fruit juice is used as the primary base in countless “mixed” beverages, without clearly informing consumers.

Apple juice is not inherently problematic. But its role as a hidden filler raises legitimate concerns about labeling transparency, nutritional perception, and consumer trust.

A Brief History of Applejuicification

Understanding how we arrived here requires looking back at the economic and regulatory forces that shaped today’s juice industry.

1970s–1980s: Industrial Apple Juice Goes Global

The groundwork for applejuicification was laid in the late 20th century. Advances in juice concentration technology made it possible to process, store, and ship apple juice in bulk at low cost. At the same time, global production—especially in the United States, China, and Poland—expanded rapidly, creating a surplus of apple juice concentrate.

As apple juice became one of the most affordable and stable juice products available, it quietly became a commodity ingredient. Its mild flavor and light color made it the ideal foundation for blending.

1990s–2000s: Exotic Fruits, Familiar Base

As health-conscious and adventurous consumers began seeking new flavors—acai, pomegranate, passionfruit—the juice market responded with “exotic blends.” However, sourcing these fruits at scale posed logistical and financial challenges.

To meet demand while keeping prices low, manufacturers began using apple juice as the bulk ingredient, blending in small amounts of the more expensive or harder-to-source fruits. Often, the front of the label would emphasize these minor ingredients, while the back quietly listed apple first.

This marked a turning point: a shift from honest representation of juice content to strategic formulation designed around marketing potential.

2010s–Today: A Quiet Industry Norm

By the 2010s, applejuicification was no longer a workaround—it was standard practice. Juice blends that contain over 60% apple juice are common across supermarkets globally. In some jurisdictions, lax labeling laws allow any fruit to be named or pictured on packaging, even if it makes up less than 1% of the total volume.

While not technically deceptive under current regulations, this practice contributes to a misleading perception of choice and nutrition. The vast majority of shoppers remain unaware that their “berry boost” is mostly apple.

The Transparency Gap

The key issue with applejuicification is not the use of apple juice itself—it’s the lack of clarity about how much is used and what role it plays in the product. Marketing language like “superfruit blend” or “antioxidant fusion” suggests a health-forward, fruit-diverse product. In reality, these are often apple juice concentrates with flavorings or trace purées added for effect.

This gap between perception and reality raises three key concerns:

1. Misleading Nutritional Expectations

While apples are nutritious in whole form, apple juice, especially from concentrate, is high in sugar and low in fiber. When it replaces nutrient-rich fruits like pomegranate or blueberry, the overall health profile of the product drops, although apple juice itself still provides important vitamins and minerals.

2. Distorted Consumer Choice

When nearly every mixed juice on the shelf uses the same base, the variety is illusory. It becomes harder for consumers to make meaningful choices based on taste, health benefits, or ethical considerations.

3. Unjustified Premium Pricing

Products marketed with exotic fruit identities often command higher prices, despite containing very little of those fruits. In many cases, consumers are paying more for marketing than for ingredients.

A Case for Reform

This is not an isolated issue. It reflects a broader need for food labeling systems that prioritize consumer understanding over commercial flexibility. There are a few practical steps regulators and manufacturers could take:

- Mandate percentage disclosure for all featured fruits on front-of-pack.

- Restrict fruit imagery and naming to reflect actual ingredient proportions.

- Encourage voluntary transparency from brands that value consumer trust.

How to Spot Applejuicification

- Flip the bottle. Ingredients are listed in descending order of quantity. If apple or grape juice comes first, that’s your base.

- Look for percentages. Ethical brands are beginning to list fruit content clearly. A bottle that says “contains 3% pomegranate” is telling you something important.

- Watch for vague terms. “Fruit juice blend,” “tropical fusion,” or “with antioxidants” often signal more marketing than meaningful nutrition.

Apple Juice Isn’t the Problem, But Obscured Information Is

It’s important to emphasize: apple juice is not the villain here. It’s a versatile, widely enjoyed product with a long history in many households. But its overuse as a silent filler—coupled with misleading branding practices—raises valid questions about how food is sold, perceived, and trusted.

In a time when consumers are more conscious of health, sustainability, and ingredient sourcing, the burden should not fall on them to decode fine print. Labels should speak clearly. Ingredients should reflect the front of the package. And pricing should correspond to what’s inside.

It’s also not just ingredients that are prone to false marketing. Living conditions for farmed animals are also often misleadingly advertised. Greater transparency in the food system must be wide-reaching, not just restricted to certain products.

Applejuicification is a symptom—not just of profit-driven formulation, but of regulatory gaps that allow marketing to outpace meaning. The solution isn’t to vilify apple juice, but to demand that juice bottles tell the full story.

No, you don’t need to avoid feeding your kids whole grains.

A lot of the evidence supporting whole grains comes from observational cohort studies, which show strong associations but cannot alone prove cause and effect due to potential confounding. That being said, cohort studies track large groups over time, revealing long-term associations between diet and health outcomes in real-world settings. This makes them valuable for shaping population-wide public health guidelines. These findings are also bolstered by increasingly robust randomized controlled trials (RCTs) that support metabolic benefits in both short- and longer-term settings.

❌ Claim: Whole grains promote weight gain and spike blood sugar, contributing to future obesity.

Fact-Check: ✅ Whole grains are not associated with weight gain; they may help prevent it.

Multiple long-term studies have observed that increased consumption of whole grains was inversely related to weight gain in both men and women, and associated with a lower risk of obesity (source, source). Following these findings, the researchers stressed the importance of clearly distinguishing between refined and whole grains for weight control (source). This supports the recommendation found in nutritional guidelines to favour whole over refined grains.

✅ Whole grains may help control appetite and reduce hunger.

Conclusions from meta-analyses support the above observation that consumption of whole grains may help with weight loss objectives, especially over time. These studies found that whole grains reduce subjective hunger and increase satiety more effectively than refined grains. This effect may help regulate calorie intake and support healthy weight maintenance (source, source).

✅ Whole grains might support healthier blood sugar and insulin responses.

Many studies have found that diets rich in whole grains can improve how the body responds to insulin, especially when compared to refined grains. This has been seen in both short-term clinical trials and long-term population studies (source, source, source). A large 2023 review found that whole grains lowered blood sugar spikes after meals and slightly improved long-term blood sugar control (HbA1c), though they didn’t affect fasting insulin levels.

It is important to note that not all studies agree (source). However, there does seem to be a consensus on the overall positive effects of consuming whole grains on human health, including a reduced risk of developing type 2 diabetes. The biological mechanisms leading to these positive effects are where some researchers appear to diverge.

This recent meta-analysis addressed those gaps by combining 10 cohort studies and 37 RCTs. It found a “significant beneficial effect of whole grain consumption on glycemic control and reducing type 2 diabetes risks.”

It is also worth noting that some of the most robust trials on whole grains and blood sugar control have been conducted in people with type 2 diabetes, who are more likely to show measurable improvements in glycaemic markers. While these results may not apply equally to everyone, they support the biological mechanisms by which whole grains can help regulate blood sugar, effects that likely benefit most people over time, especially when replacing refined carbohydrates.

The bottom line is that whole grains aren’t just “sugar in disguise.” While not a cure-all, replacing refined carbs with whole grains can help support weight control and health, especially as part of a balanced, nutrient-dense diet.

✅ In children, the evidence is limited but not supportive of harm.

In children specifically, there is a lack of long-term data on the subject. However, the current evidence does not support the claim that whole grains promote weight gain or harmful blood sugar spikes. In fact, they are associated with better appetite control, lower long-term weight gain, and potentially improved insulin sensitivity. Given that studies show typical intakes of whole grains among children (and adults) are low (source, source), advising parents to avoid whole grains contradicts the available scientific evidence and could undermine healthy eating habits.

What do nutritional guidelines say about whole grains?

In the UK, the recommendation is to make starchy foods just over a third of what we eat, and to favour whole grains (source). In the U.S., MyPlate replaced the Food Pyramid and recommends making half your grains whole grains (source).

What does this mean for public health?

While individual RCTs are sometimes small or short-term, the combination of consistent large-scale cohort data and supportive clinical trials gives a high degree of confidence that swapping refined for whole grains helps with satiety, weight control, and blood sugar management, especially at the population level. Longer, more diverse interventions are still needed, particularly in children.

What about fruit juice and flavoured yoghurt?

With both of these, the key point is that moderation matters. While high consumption of juice may contribute to excess calorie intake and slight weight gain in young children, moderate amounts (as per pediatric guidelines) do not cause obesity and in some cases can improve nutrient intake (source). This is in the context of 100% fruit juice, and of a healthy diet rich in nutrient-dense foods.

One issue here might be the wide variety of juice and yoghurt products. It is undeniable that in a context where the majority of the population consumes too much sugar (especially free sugars), moving from flavoured to plain yoghurt is a good choice. That being said, it is also important to ask the question: what snack is that flavoured yoghurt replacing? According to the authors of a 2014 review on yoghurt consumption, “even when sugar is added to otherwise nutrient-rich food, such as sugar-sweetened dairy products like flavored milk and yogurt, the quality of childhood and adolescent diet is improved. However, if sugars are consumed in excess, deleterious effects may occur.”

Final Takeaway

To be clear, promoting healthy eating patterns among children is an extremely important endeavour. In his caption, Dr. Berg also notes that “Healthy meals for children should include proteins, vegetables, fruits, nuts, and seeds.” This is indeed an important message, and focusing on these foods while minimising ultra-processed, nutrient-poor foods is highly beneficial to reduce rates of obesity among children.

So what’s the issue? Problems arise when certain foods are demonised because they don’t fit with one specific diet plan. Why? Because the narrative that this messaging taps into risks eroding trust in well-established nutritional guidelines, or in the expertise of health professionals, which can undermine public health efforts to tackle the very problem Dr. Berg is referring to: childhood obesity.

Eric Berg, D.C. is a well-known promoter of the keto diet, with an emphasis on nutrient-dense foods. The keto diet (short for ketogenic) is a very low-carbohydrate, high-fat diet designed to shift the body into a state of ketosis, where it burns fat for fuel instead of carbs. Because whole grains are naturally high in carbohydrates, they don’t fit within the standard keto framework.

A well-planned keto or low-carb diet can indeed be nutrient-dense and offer health benefits for some people. But it’s important to plan these diets with the help of a health professional to avoid nutrient deficiencies.

The purpose of public health nutrition is to offer guidance for the entire population. That means taking into account factors like accessibility, cultural preferences, affordability, nutritional adequacy, and environmental sustainability. Whole grains have an important, positive role to play in such a framework.

When we start demonising individual foods because they don’t fit one specific diet model, we risk encouraging fear-based decisions and more importantly undermining trust in nutrition science, thus making it harder for public health efforts to reach the people who need them most.

We have contacted Eric Berg, D.C. and are awaiting a response.

This article was updated on July 21 to incorporate user feedback and provide more detail on the types of evidence cited, including their relevance for shaping public health guidelines.

Disclaimer

This fact-check is intended to provide information based on available scientific evidence. It should not be considered as medical advice. For personalised health guidance, consult with a qualified healthcare professional.

Before moving to the specific claims made in this post, let’s outline the underlying assumptions that tie together the different parts of Eric Berg’s argument:

- Things that are commonly considered healthy (like juice, flavoured yoghurt, and whole grains) are actually harmful.

- Sugar is sugar, no matter where it comes from.

In the caption, Eric Berg, D.C. claims that “there are a lot of so-called ‘health foods’ out there for kids that are actually just junk.” That’s the thread tying together the foods in this post: junk, disguised as health. This is actually a common theme on social media: just a few weeks ago, Gary Brecka released an episode of the Ultimate Human Podcast titled “Why Your ‘Healthy’ Food Is Actually Harmful.” As a result, many social media users regularly post comments expressing concern and overwhelm when it comes to food choices, and who to trust.

This raises the question: are whole grains really as ‘harmful’ as juice or flavoured yoghurt, or are we comparing unlike things?

It’s important to tackle these two assumptions up front, because they obscure the real problem. This kind of narrative distracts from what actually matters: it risks focusing on the wrong enemy.

A lot of major nutrition guidelines are being reworked to promote both human and planetary health, and a major focus is shifting people away from refined grains and toward whole grains. So when posts like this suggest that whole grains are no better than any other food that produces glucose, they can sow confusion and erode trust in public health efforts.

The difference is, most nutritional guidelines don’t group flavoured yoghurt and whole grains together. They don’t encourage high consumption of products with added sugar (including flavoured yoghurts) but they don’t suggest you need to avoid them completely either.

Marketing, not nutrition policy, is what makes ultra-processed, sugary products look like healthy choices. It’s marketing that sticks “high protein” on a yoghurt that’s also loaded with sugar. These are the real problems we need to tackle, not a fundamental misunderstanding of what sugar is or where it comes from.

Indeed, sugar itself is not the enemy either. Yes, we know that diets that are high in sugar are associated with negative health outcomes (source, source). And yes, consuming whole grains produces glucose, but this is not something to be feared inherently. Why? Because whole grains contain fibre, vitamins, and minerals that slow digestion and moderate blood sugar responses, which is why many whole grains have a low to medium glycaemic index. Similarly, fruits deliver natural sugars alongside fibre and antioxidants. Finally, the way we combine foods in meals, including fat, protein, and fibre, further shapes how our blood sugar responds. So no, it’s not as simple as saying “sugar is sugar.”

So, what exactly are whole grains, and what does the evidence say about whole grains and their effects on weight and blood sugar?

“Grains are considered wholegrain when the bran and germ of the grain is not removed during processing and refining. The bran and germ contain important nutrients that have benefits for our health. There is a breadth of evidence to support this advice. Analysis published in the Lancet took 185 prospective studies and 58 clinical trials combined to assess the markers of human health associated with carbohydrate quality. Higher consumers of wholegrains demonstrated a significantly lower risk of all-cause mortality (-19 per cent), coronary heart disease (-20 per cent), type 2 diabetes (-33 per cent), and cancer mortality (-16 per cent) compared with lower consumers. Similar results were found for fibre intake, indicating this may be the beneficial nutrient in wholegrains.” (The Science of Plant-Based Nutrition, p. 132)

Don’t fear foods just because they contain carbs: context matters. Whole grains come with fibre, vitamins, and minerals that slow digestion and support long-term health.

Eric Berg, D.C. recently shared a post on Instagram in which he advises against feeding children various drinks or foods such as juice, flavored yoghurt, and whole grains. He claims that these “can spike blood sugar and contribute to future obesity.” The main focus of this fact-check is on whole grains, to tackle the potential confusion which this post could cause, especially given the growing emphasis on whole grains in nutritional guidelines.

Claim: “Avoid serving your kids juice, flavored yoghurt, whole grains, and foods that promote weight gain, as these can spike blood sugar and contribute to future obesity. If consumed at all, they should be limited to very small amounts.”

In fact, whole grains are consistently linked to better appetite control, healthier weight, and a reduced risk of type 2 diabetes when consumed as part of a balanced diet.

Many reputable organisations, including the British Dietetic Association, highlight the important role of whole grains in a healthy diet. When social media posts portray them as no better than sugary snacks, confusion can easily grow. This fact-check examines the assumptions and reasoning behind such claims, to help readers move beyond broad categorisations that could cause unnecessary fear.

What does “grass-fed” really mean? The meaning behind the label

Many consumers choose grass-fed beef because they believe it’s more ethical, sustainable, or nutritious than conventional meat. The image is compelling: cows grazing freely in lush, open pastures. But in practice, the term grass-fed doesn’t always align with that vision. In this piece, I break down what grass-fed really means, uncover some common misconceptions, and offer guidance on how to make informed choices.

Is There a Legal Definition for “Grass-Fed” Beef?

Not anymore. The USDA once maintained a voluntary standard stating that grass-fed animals must be fed only grass and forage after weaning. But in 2016, the USDA withdrew its official definition and label standard, leaving approval in the hands of the Food Safety and Inspection Service (FSIS).

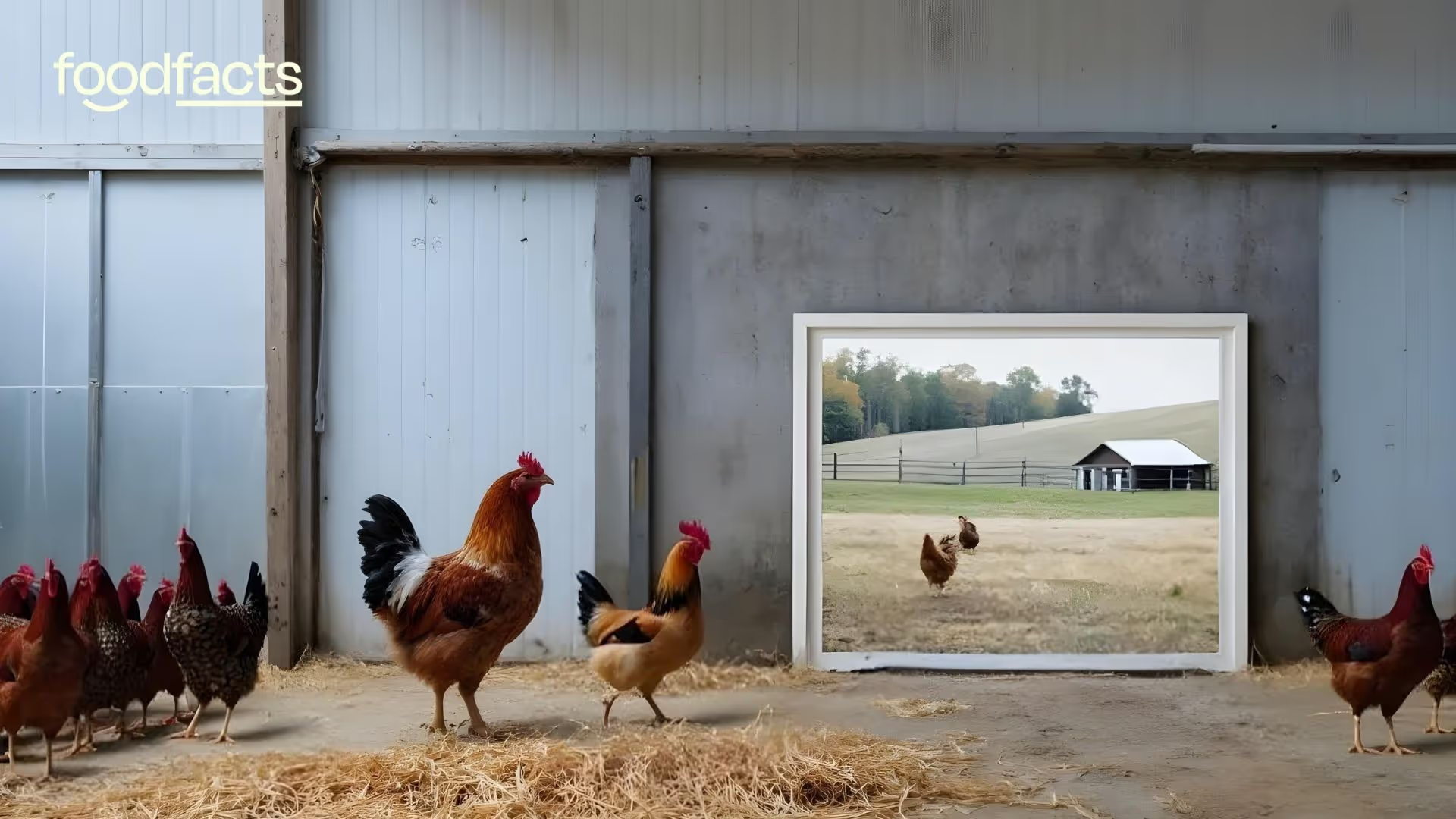

Now, individual producers define their own criteria for “grass-fed,” and FSIS simply reviews whether a label is “not misleading.” This has opened the door to a wide range of interpretations—from animals raised on open pasture to those kept in confinement and fed dried grass indoors. For consumers, this creates a significant trust gap between what the label suggests and what’s actually happening on the farm.

Do Grass-Fed Cows Really Eat Grass on Pasture?

Surprisingly, many do not. In colder or drier regions, or during winter, cows labeled as grass-fed are often kept indoors and fed grass in the form of hay, silage, or other forage crops. While this meets the dietary requirement, it falls short of the image of cows grazing freely in natural fields. Farmers explain that they keep cows indoors during winter to protect them against the cold climate, give the pasture a chance to recover, ensure adequate supplies of food, and improve manure management. However, a growing number of farmers are keeping cows indoors all year round.

As many producers rely heavily on confined feeding of forage, especially during finishing (the final months before slaughter), these animals may only spend a small amount of their life on actual pasture.

This distinction matters—not just for the animal’s experience, but also for environmental and welfare outcomes. The idyllic pasture image comforts consumers, but it doesn’t reflect the more industrial reality behind many products.

Is Grass-Fed Beef Better for the Environment?

This is where things get even more complex. While it’s often assumed that grass-fed cattle are better for the planet, the data paints a more nuanced picture.

Grass-fed cows generally take longer to reach slaughter weight compared to grain-fed cattle. That extended lifespan leads to more production of methane, a potent greenhouse gas. High-forage diets can significantly increase methane emissions, and other research shows that the carbon footprint of grass-fed beef can be up to 42% higher than that of grain-fed beef when factoring in land use, emissions, and water consumption.

So while grass-fed systems may avoid the concentrated waste and pollution of feedlots, they can carry other ecological costs—especially when scaled to meet demand.

It also depends on where the cows are being farmed. If the land is already pasture, then there is a stronger argument in support of grass-fed cows, yet research shows that simply allowing these areas to rewild free of livestock is the best thing we can do for the environment. However, if companies are cutting down lush and biodiverse parts of rainforests in order to grow grass so they can claim to sell grass-fed beef, then not farming cows at all is clearly the most sustainable option.

Is Grass-Fed Beef More Humane for Animals?

Many people assume that grass-fed animals live happier, more natural lives—but the label itself offers no guarantees about animal welfare. It only describes diet—not space, social interaction, or quality of life.

Without robust third-party oversight, “grass-fed” animals could be confined in crowded barns, with minimal enrichment and no guaranteed access to the outdoors, especially during the finishing stage of production. The key distinction here is between grass-fed and pasture-raised, and even that difference isn’t always clear on product labels.

If you want to eat beef that comes from higher-welfare systems, look for certifications that include both feeding and living standards, such as Certified Grassfed by A Greener World (AGW), which requires continuous pasture access, no feedlots, and strong welfare criteria. However, beef from these systems often carries higher emissions, as the cows take longer to reach slaughter weight and require more land. Cows farmed according to regenerative grazing practices can contribute to improved soil health and carbon sequestration. Of course, the best way to ensure high animal welfare and minimal environmental impact is not to put animals in farms at all.

Why Do People Think Grass-Fed Is Healthier and More Ethical?

Marketing is a big reason. Food producers know that consumers associate grass-fed with natural, ethical, and environmentally friendly farming. That perception boosts sales and justifies premium pricing.

But without strong regulations or standardized definitions, the term has become a feel-good label that may not reflect meaningful differences in farming practices. Consumers often overestimate what the label guarantees, trusting imagery that rarely matches reality. This imagery is also used for meat produced from fully-indoor farms, so this issue of false marketing is not confined to just grass-fed cows.

This is why transparency matters. Ethical food systems depend on honest communication—especially when it comes to animal welfare and sustainability.

"Grass-fed beef is often marketed as a win-win, but it's a tradeoff at best—slightly better welfare in exchange for far worse land use and methane emissions. The industry uses this narrative to greenwash a destructive system and delay change. It's cultural spin, not an environmental or food security solution. The real solution is shifting to plant-based foods - and systemically making it easier for everyone to choose these better options - that truly protect animals, ecosystems, and our future." - Nicholas Carter

How Can I Ensure Good Welfare And A Low Environmental Impact?

Unfortunately, there is no silver bullet here. The highest welfare cows have the highest environmental impact, as they require more land and take longer to reach slaughter weight.

On the other hand, cows with the lowest environmental impact are often those in the very worst farming conditions, either tethered indoors to produce milk, or spending most of their lives cramped into feedlots.

Bearing this in mind, the best thing we can do as active consumers is:

- Call for greater transparency in the food system, helping to reduce the misleading nature of food labels

- Demand greater enforcement of greenwashing legislation, especially against meat and dairy companies, which have some of the highest environmental impacts on the earth

- Incorporate a greater amount of plant-based foods into your diet. Plant-based foods almost always have a smaller environmental impact than meat and dairy.

Should I Trust the Grass-Fed Label?

It depends on what you expect from it. If you’re looking for beef and milk from animals fed a forage-based diet, the label often delivers. But if you're expecting open pastures, humane treatment, and lower environmental impacts, the picture is much less clear.

As more consumers ask questions and demand higher standards, there’s hope for a more honest food system—one where marketing matches reality, and where food choices can genuinely reflect our values.

Is acrylamide the most dangerous ingredient in your food?

Claim 4: "This isn't fear-mongering, it's a real risk that most people don't know exists."

By definition, this claim cannot be fact-checked without knowing exactly the impact of this post on its audience. However, it is possible to comment on its potential to trigger fear.

Acrylamide is indeed something to be aware of, and reducing exposure, by not overcooking starchy foods, toasting bread lightly, and eating a varied diet, is smart. But it's equally important to put the risk in perspective. To do so, we need to dive deeper into risk perception: how do we understand and perceive risks?

Why perception of risk gets distorted… and why it matters

Experts and scientists evaluate risk very differently from the average person. For experts, risk is usually based on probability, dose, exposure, and strength of evidence. But for most of us, risk perception, especially when dealing with unfamiliar topics, is often shaped by mental shortcuts called heuristics. These shortcuts aren’t inherently bad; in fact, they are very helpful for making quick decisions without getting overwhelmed. Heuristics are often grounded in generalisations, and they can lead to accurate predictions and sometimes good decisions. But they also often generate false or irrational conclusions, and it’s important to understand how this can happen.

Two heuristics play a big role here:

- The availability heuristic leads us to judge a risk as more serious or likely if it’s easy to recall. On social media, we might see repeated warnings about “hidden toxins” in food, or about unfamiliar chemical processes quietly and slowly killing us. Even if these risks are small or poorly understood, the more we see them, the more real and threatening they feel.

- The affect heuristic makes us rely on our emotions, especially fear, when judging risk. Scary phrases like “DNA damage,” “potent neurotoxin,” or “linked to cancer,” alongside images of lab suits or hazardous labels, can trigger feelings of dread. Suddenly, something like acrylamide can feel like an immediate personal danger, regardless of the actual exposure levels or of the context in which it is known to be harmful.

Importantly, the issue isn’t this one post about acrylamide. The problem lies in the broader narrative it taps into: a repeated, emotionally charged narrative that implies we’re constantly being poisoned by ordinary foods, and that no one is telling us the truth. Over time, this narrative erodes our ability to judge risk accurately and proportionally. Even when facts are later introduced, like clarifying that alleged cancer links are based on high-dose animal studies, those emotional impressions are hard to undo. Emotions have been shown to play an important part in risk perception, and their influence can persist even when we reach a different, more rational cognitive assessment (source).

That’s why it’s so important to raise awareness of how we perceive risk. If we understand these patterns, we can pause before reacting, and evaluate food safety claims with the full context they deserve. Just because solutions are provided does not mean that there is no fear. In fact, the more likely damage comes from repeated exposure to similar content, which could lead to toxic relationships with food. Distorted risk perception can easily make us lose sight of the big picture. For example, by overly focusing on a single risk, we might forget the role played by one’s overall diet in counteracting that risk.

Final thoughts for you to consider

Let’s finish this fact-check by taking a quick look at the solutions provided by Eric Berg to protect oneself from acrylamide exposure. Eric Berg recommends reducing high-heat cooking, cooking with saturated fats like tallow, butter or coconut oil, eating cruciferous veggies, sipping green tea and enjoying spirulina.

Reducing high-heat cooking, for example by lightly toasting rather than ‘burning’ toast, is indeed encouraged. However, the rest of those tips do not reflect the general recommendations provided by health authorities. This isn’t because they are necessarily wrong; rather there might be a lack of supporting evidence, or they might distract from the bigger picture.

For example, cooking fat type may influence acrylamide levels slightly, but using saturated fat isn’t advised by the EFSA (European Food Safety Authority) or by the FDA (U.S. Food and Drug Administration). This is because of broader health concerns related to the higher consumption of saturated fat (source).

This is a good reminder that distorted risk perception can make us lose sight of the big picture. Moving on to the use of green tea, most of the studies demonstrating green tea’s protective effects against acrylamide were conducted in animal models using controlled doses of acrylamide that often exceed typical human dietary exposure. In these experiments, green tea extract was administered daily, sometimes in concentrated doses, and showed improvements in biomarkers of liver, kidney, neural, and reproductive health (source, source). However, most people are not exposed to acrylamide levels as high as those used in these studies. So while there is nothing wrong with sipping green tea, these measures do not reflect the advice given by health authorities, which instead focuses on variety in cooking methods, and in the diet itself:

- Limiting certain cooking methods, in particular frying and then roasting. By contrast, boiling and steaming do not produce acrylamide. Soaking raw potato slices in water before frying can also help;

- Toasting bread to a lighter colour.

Finally, while this might not make for compelling social media content, the EFSA reminds us of the importance of a balanced diet, which “generally reduces the risk of exposure to potential food risks. Balancing the diet with a wider variety of foods, e.g. meat, fish, vegetables, fruit as well as the starchy foods that can contain acrylamide, could help consumers to reduce their acrylamide intake” (source).

We have contacted Eric Berg and are awaiting a response.

Disclaimer

This fact-check is intended to provide information based on available scientific evidence. It should not be considered as medical advice. For personalised health guidance, consult with a qualified healthcare professional.

Claim 2: "It's called acrylamide, a class A carcinogen according to the EPA."

Fact Check: This wording could be confusing. The U.S. Environmental Protection Agency (EPA) classifies acrylamide as a Group B2 "probable human carcinogen", while a “Class A” carcinogen would denote a known human carcinogen (source).

Similarly, the International Agency for Research on Cancer (IARC) lists acrylamide as a probable human carcinogen (Group 2A) (source). In an editorial published in the American Journal of Clinical Nutrition, researchers note that “the body of evidence [on the links between acrylamide and cancer risk] is still cloudy, even after 20 years of research,” explaining why this classification has not been updated.

As more research is needed, health agencies recommend keeping exposure as low as reasonably achievable. In her book The Science of Plant Based Nutrition, Registered Nutritionist Rhiannon Lambert explains that, for example, “compared to cooking in oil, air frying helps to lower levels of acrylamide.” Importantly, she also reminds us that “it is very unlikely that we are consuming enough acrylamide to cause [the development of cancer]”.

Claim 3: "Acrylamide is a potent neurotoxin."

Fact Check: At high doses, particularly in industrial or occupational settings, acrylamide has been shown to cause nerve damage. This has been well-documented among workers exposed to large quantities (source).

However, the amounts found in food are typically far lower. Therefore, the claim lacks context as it fails to make that distinction, which has been shown to be significant (source). In a literature review on the neurotoxicity of acrylamide, researchers concluded that:

“It is clear that acrylamide is neurotoxic in animals and humans. The neurotoxic effects, however, seem to be only a problem in humans with high-level exposure. The lower levels of exposure estimated from dietary sources are not associated with neurotoxic effects in humans, but further studies are needed on low-level chronic exposures to determine cumulative effects on the nervous system.”

Claim 1: "The most dangerous ingredient in your food isn't even on the label... it's a by-product quietly formed when certain foods are cooked."

Fact Check: Acrylamide is indeed not listed on food labels. That's because it's not an added ingredient. As Eric Berg states here, it's a by-product that forms naturally during high-temperature cooking (above 120°C or 248°F), particularly in starchy foods like potatoes and grains.

This occurs during the Maillard reaction, a chemical process responsible for browning and flavour. The reaction involves the amino acid asparagine and reducing sugars, such as glucose or fructose (source). While the label doesn’t mention acrylamide, its formation is known to scientists and food safety authorities, which assess the potential risks for public health and work with food manufacturers to reduce acrylamide in food (source).

Calling it the “most dangerous (ingredient)” seems sensationalist, as simple measures, such as avoiding burning toast, can reduce consumer exposure. While Eric Berg does offer solutions such as reducing high-heat cooking, the framing of this post can significantly affect the public’s risk perception, an important issue we’ll come back to in detail at the end of this fact-check.

There’s no need to panic about acrylamide. Most of the fear-based information you see online comes from animal studies, which test extremely high doses far beyond what people would ever consume. These studies are important for identifying potential risks but don’t directly reflect how humans eat or process acrylamide.

Unfortunately, this important nuance is frequently ignored by unqualified wellness influencers, such as Eric Berg, who’ve gained popularity by promoting fear-based messaging and frequently distorting science to spread misinformation.

Decades of toxicological research and ongoing evaluations by international health authorities, including the World Health Organisation and the European Food Safety Authority, show that the levels of acrylamide typically found in food are far below those linked to harm in animal studies. Human research has not found clear evidence that dietary acrylamide causes health problems. Based on current data, global experts agree that acrylamide in food is safe when consumed as part of a balanced and varied diet.

Avoid emotional language: Sensational or emotionally charged wording can distort risk perception and make it harder to see the bigger picture.

In a recent Instagram post, Eric Berg shares information about acrylamide, which he claims is lurking in everyday foods and which you won’t even find on food labels. While this might sound scary, Eric Berg says this is not fear-mongering and shares several tips, from reducing high-heat cooking to sipping green tea. Let’s break down and fact-check some of the key claims made in this post.

Acrylamide is a naturally occurring compound that forms when certain foods are cooked at high temperatures. Its potential health risks have been, and continue to be, studied extensively, mostly in animal models using high doses not typically encountered in human diets. Although reducing acrylamide exposure is a valid public health recommendation, especially by avoiding overcooked or burnt foods, the post’s tone, combining alarming language and visuals, does not reflect what the evidence currently shows about dietary exposure in humans.

This kind of narrative oversimplifies complex issues and can undermine scientific literacy. Most people don’t assess risks the way scientists do. Sensational messaging makes for compelling content, but when it comes at the expense of understanding, our perception of risk is more likely to be shaped by mental shortcuts (known as heuristics), rather than by evidence.

This fact check goes beyond the facts on acrylamide. It explores how our perception of risk can be influenced by emotion, repetition, and framing. Because facts matter; but how we frame those facts matters too.

The carnivore diet: what does the data say about its impact on female health?

Final Take Away

Anecdotes can be powerful, especially in a fast-paced society where issues like fatigue are common, for example among women juggling many demands. This makes the promise of a diet that claims to be the ‘key’ to feeling better or solving a wide range of health problems especially appealing. However, this kind of messaging is a hallmark of nutrition misinformation. It oversimplifies complex issues, often attributing improvements to a single factor without considering other changes, such as reduced intake of ultra-processed foods high in fat, salt and sugar, or increased physical activity.

What’s missing in these narratives is evidence. Current research does not support claims that the carnivore diet improves hormonal health or leads to sustainable weight loss. These narratives also overlook the potential long-term risks of such a restrictive eating pattern, including those explored in this article, such as an increased risk of cardiovascular disease and cancers.

Importantly, while influencers may say they "feel great" on this diet, subjective feelings don’t reveal hidden health issues, including the development of heart disease, which often has no early symptoms. Algorithms also tend to amplify success stories while suppressing negative experiences, creating a skewed perception of effectiveness.

Ultimately, to truly evaluate health claims, we must rely on high-quality scientific evidence, such as randomized controlled trials, clinical studies, and systematic reviews, not social media testimonials.

We have contacted Bella (‘Steak and Butter Gal’) and are awaiting a response.

Disclaimer

This fact-check is intended to provide information based on available scientific evidence. It should not be considered as medical advice. For personalised health guidance, consult with a qualified healthcare professional.

Claim 2: [‘Never Felt Better’]

Fact-Check: Steak and Butter Gal claims that women “go high-fat carnivore and feel the best they’ve ever felt”. Participants in a 2021 study of the dietary intake of individuals following a carnivore diet did report that their diet enhanced their general health and their physical and mental well-being. But ‘feeling good’ does not equate to ‘being healthy’. Many serious health conditions, such as high blood pressure, atherosclerosis, osteoporosis, or even cancer, can develop silently, without noticeable symptoms. Moreover, this self-reported survey didn’t include clinical assessments like blood tests, scans, or long-term disease outcomes, so we can’t draw conclusions about the diet’s actual impact on long-term health.

It is well established that diets lacking in fruits and vegetables are consistently linked to a higher risk of various health conditions, including heart disease, certain cancers, and an increased overall risk of death (source).

Consuming large amounts of red and processed meats has also been strongly connected to heightened risks of several illnesses, such as colorectal, breast, and colon cancers, as well as cardiovascular disease (source 1, source 2). According to NHS guidance, individuals who consume more than 90 grams of red or processed meat daily, roughly equivalent to three thin slices of roast beef, pork, or lamb, should aim to reduce their intake to 70 grams per day to lower their health risks.

Claim 3: Carnivore diet and “amazing” hormonal health

Fact-check: It is important to note that the impact of the carnivore diet on hormonal health has not yet been properly researched. The claim that the carnivore diet is “amazing” for hormonal health is not backed by peer-reviewed human trials, and may ignore potential long-term risks, especially given what we know about hormonal sensitivity to dietary patterns (source, source).

It’s also essential to recognise that each individual’s body responds differently to dietary changes, and there is no universal approach that works for everyone.

Claim 1: “I have seen women go high-fat carnivore [...] they lose weight effortlessly.”

Fact-check: an exaggerated claim

The carnivore diet is typically high in fat. One of its well-known advocates, ‘The Steak and Butter Gal,’ promotes a fat-heavy eating pattern. While some fat is essential, helping the body absorb fat-soluble vitamins like A, D and E, excess fat that isn’t used for energy or cell function gets stored in the body.

Fat, regardless of type (saturated or unsaturated), is calorie-dense, providing 9 kilocalories (37 kilojoules) per gram: more than double the energy found in proteins or carbohydrates, which offer 4 kilocalories (17 kilojoules) per gram (NHS).

Excessive intake of saturated fat can elevate levels of LDL cholesterol, generally referred to as "bad" cholesterol, in the blood, therefore increasing the risk of cardiovascular diseases, including heart attacks and strokes. According to NHS recommendations, women should limit their saturated fat intake to no more than 20 grams per day. To illustrate, a single pound of ground beef, as featured in Steak and Butter Gal’s breakfast, contains approximately 51 grams of saturated fat.
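The arithmetic behind these figures can be checked with a short sketch. It uses only the numbers quoted above (9 and 4 kilocalories per gram, the 20-gram daily limit for women, and roughly 51 grams of saturated fat in a pound of ground beef); the function and variable names are illustrative assumptions, not part of any official NHS tool.

```python
# Figures as quoted in the article above; all names here are illustrative.
KCAL_PER_GRAM = {"fat": 9, "protein": 4, "carbohydrate": 4}
SAT_FAT_LIMIT_WOMEN_G = 20        # NHS daily limit for women, as cited above
SAT_FAT_POUND_GROUND_BEEF_G = 51  # approximate figure quoted above


def energy_kcal(grams, macronutrient):
    """Energy in kilocalories supplied by a given mass of one macronutrient."""
    return grams * KCAL_PER_GRAM[macronutrient]


def multiple_of_limit(sat_fat_g, limit_g=SAT_FAT_LIMIT_WOMEN_G):
    """How many times the daily saturated fat limit a given amount represents."""
    return sat_fat_g / limit_g


ratio = multiple_of_limit(SAT_FAT_POUND_GROUND_BEEF_G)
sat_fat_energy = energy_kcal(SAT_FAT_POUND_GROUND_BEEF_G, "fat")

print(f"Saturated fat in the breakfast: {ratio:.2f} times the daily limit")
print(f"Energy from that saturated fat alone: {sat_fat_energy} kcal")
```

On these figures, the saturated fat in that single breakfast is about two and a half times the recommended daily limit, and it supplies 459 kilocalories before any other fat, protein or food is counted.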

The diet proposed by Steak and Butter Gal is a high-fat carnivore diet, and therefore one low in carbohydrates, an approach suggested to aid weight loss. However, there is limited scientific evidence that a high-fat diet leads to sustainable weight loss. In fact, a 2021 clinical trial found that in a 10-week intervention, a low-carbohydrate high-fat diet group did not achieve any favourable weight loss outcomes when compared to a normal diet.

The carnivore diet is extremely restrictive and may be difficult for many individuals to sustain over time. Similar approaches, like the ketogenic (keto) diet, have also been linked to weight loss claims but come with comparable limitations. Both diets cut out entire food groups, making them hard to stick to over time (source). Therefore, the claim that women on a carnivore diet lose weight ‘effortlessly’ is not representative, as this diet is in fact highly restrictive and unsustainable for most.

The major issue with these claims about the carnivore diet and women’s health is that they rely heavily on feelings and personal testimonials rather than solid evidence, likely because very little research is available, as is too often the case in women’s health. This creates a skewed picture that overstates potential benefits of the carnivore diet while ignoring the growing body of evidence showing that plant-rich diets are consistently linked to better health outcomes.

When encountering diet and nutrition content on social media, it's important to remain skeptical of claims that a single diet can solve all health problems. Evidence-based nutritional guidelines (NHS) support a balanced diet that includes a variety of foods in the right proportions to promote overall health and maintain a healthy weight.

On May 9th, the lifestyle influencer known as ‘Steak and Butter Gal’ took to Instagram to credit her carnivore diet for a range of health benefits, including “amazing hormones, clear glowy skin, amazing hair growth, and zero bloat.” She went on to say, “I have seen women go high-fat carnivore and feel the best they’ve ever felt, they lose weight effortlessly.”

As many women increasingly turn to social media for advice on how to improve hormonal balance, skin and hair health, and manage weight, it becomes important to distinguish between credible health guidance and unverified personal anecdotes. This article will fact-check the claim that a high-fat carnivore diet can improve hormone function and promote effortless weight loss in women, using the best available scientific evidence.

The claims in this video rely solely on anecdotal evidence and lack scientific support. Research shows that high intake of saturated fat increases the risk of heart disease, while excessive consumption of red and processed meat is linked to negative health outcomes, including certain cancers. There is no conclusive evidence that a carnivore diet promotes weight loss or improves hormonal health. In fact, restrictive diets like this one can lead to adverse effects such as irregular or missed menstrual cycles and are often unsustainable, offering no unique benefits over other restrictive diets.

Women’s health, particularly when it comes to hormones, skin, hair, and weight, is often underserved in mainstream medical research and can be difficult to navigate. This lack of clear, accessible information leaves many women turning to social media influencers for guidance. But while personal anecdotes may sound convincing, they don’t carry much weight unless backed by solid scientific evidence. Misinformation can lead to decisions that negatively affect your health and wellbeing. That’s why it’s vital to examine claims like this one through an evidence-based lens, so you can make informed, safe, and effective choices for your body.
